Trigger warning: Computer junk ahead!

"Dot product" is the name of a common mathematical pattern. If you have two lists of numbers of equal length (called 'vectors') A and B, and you multiply each pair of numbers Ai * Bi, then add up the results of all the multiplications, you get the dot product:

A: 1, 2, 3, 4

B: 10, 20, 30, 40

A⋅B= 1x10 + 2x20 + 3x30 + 4x30 = 10 + 40 + 90 + 120 = 260

It shows up everywhere. And I see variations of it all the time in digital image processing, especially in 4-element situations, as with that little A & B example.

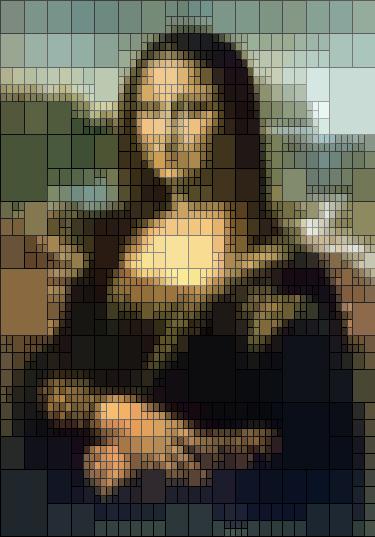

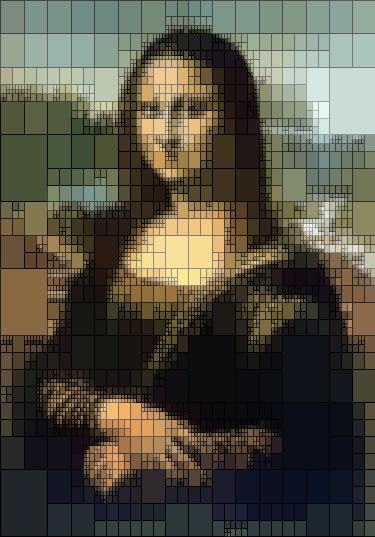

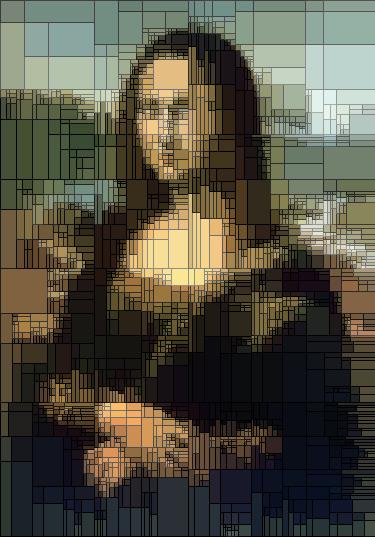

For example, deep in the heart of my bi-cubic interpolation code (a way to do image scaling), there is this bit of code which takes 16 pixel values and two vectors of coefficients (af and bf) four values each.

float dot_flt_native(float *pix, float *af, float *bf)

{

float t1 = af[0] * pix[00] + af[1] * pix[01] + af[2] * pix[02] + af[3] * pix[03];

float t2 = af[0] * pix[04] + af[1] * pix[05] + af[2] * pix[06] + af[3] * pix[07];

float t3 = af[0] * pix[08] + af[1] * pix[09] + af[2] * pix[10] + af[3] * pix[11];

float t4 = af[0] * pix[12] + af[1] * pix[13] + af[2] * pix[14] + af[3] * pix[15];

return res = bf[0] * t1 + bf[1] * t2 + bf[2] * t3 + bf[3] * t4;

}

It does five dot products. First, it does a dot product of the first four pixels and the 'af' vector, then another for the second 4 pix, and another for the third and fourth sets of pix. Those four dp's gives four intermediate results (t1,t2,t3,t4) which are then used in another dot product with the 'bf' vector. And that's the result. Twenty multiplications and fifteen additions.

Note the nice pattern in the way I've laid-out the code. Note the repetition: grab 4 pixels, multiple each by the corresponding item from af; Repeat; Repeat; Repeat. Different data pushed through the same simple process, repeated. Single Instruction, Multiple Data: SIMD.

Modern CPUs have the ability to do these parallel calculations in a single instructions: line your vectors up, let the CPU chomp away four (or eight or 16 or 32!) values at a time - for numerical accuracy reasons I can't use 8 or 16-bit integers, I have to use 32-bit floating point values in this calculation. As always, the trick with this stuff is to get the data lined up with as little fuss as possible - you don't want the time spent on setup to overwhelm any speedup you gain from being able to do parallel calculations. But, this data is trivial to line up. So, it's all about doing the calculation.

First method: as-is. Since I already have the code above, I can use that as the benchmark for accuracy and for speed. We'll call that the native floating point version.

Second method: what I really have. The version above uses floating point data as the input, but my actual data is integers and they have to be converted to floating point data, first. That's easy enough. And so this is my real benchmark.

float dot_int_native(unsigned char *pixb, float *af, float *bf)

{

float pix[16];

for (int i=0;i<16;i++) pix[i] = pixb[i];

float t1 = af[0] * pix[00] + af[1] * pix[01] + af[2] * pix[02] + af[3] * pix[03];

float t2 = af[0] * pix[04] + af[1] * pix[05] + af[2] * pix[06] + af[3] * pix[07];

float t3 = af[0] * pix[08] + af[1] * pix[09] + af[2] * pix[10] + af[3] * pix[11];

float t4 = af[0] * pix[12] + af[1] * pix[13] + af[2] * pix[14] + af[3] * pix[15];

return res = bf[0] * t1 + bf[1] * t2 + bf[2] * t3 + bf[3] * t4;

}

Same thing as before, but with a little loop to convert the input to floats. 20 multiplies, 15 adds, and now 16 conversions from integer to float (but how expensive can they be!?)

Now for an attempt at SIMD. It just so happens that there is a 'dot product' instruction in CPUs that support Intel's 'SSE4' tech. It does a dot product in one instruction. Line em up, pull the trigger, done. The only ugly part about this is that we have to pick out the results from the first four dot product instructions (_mm_dp_ps), then pack them into a new vector for the final dot product.

float dot_flt_sse_dpps(unsigned char *pixb, float *af, float *bf)

{

// same as above, convert integers to floats.

// use that ugly __declspec thing to align the data to

// 16 bytes, for SSE reasons

__declspec(align(16)) float pix[16];

for (int i=0;i<16;i++) pix[i] = pixb[i];

// load the coefficient vectors into SSE vectors

__m128 afx = _mm_load_ps(af);

__m128 bfx = _mm_load_ps(bf);

__m128 t1 = _mm_load_ps(&pix[0]); // load four pixels

t1 = _mm_dp_ps(t1, afx, 0xff); // dot product against afx vector

__m128 t2 = _mm_load_ps(&pix[4]); // load four more pixels

t2 = _mm_dp_ps(t2, afx, 0xff); // dot product against afx vector

__m128 t3 = _mm_load_ps(&pix[8]); // etc

t3 = _mm_dp_ps(t3, afx, 0xff);

__m128 t4 = _mm_load_ps(&pix[12]);// etc

t4 = _mm_dp_ps(t4, afx, 0xff);

// grab the results from t2,t3,t4 and pack them in

// with the result from t1.

t1.m128_f32[1] = t2.m128_f32[0];

t1.m128_f32[2] = t3.m128_f32[0];

t1.m128_f32[3] = t4.m128_f32[0];

// dot product against bfx vector

t1 = _mm_dp_ps(t1, bfx, 0xff);

// grab the result out of t1

return t1.m128_f32[0];

}

The _mm_dp_ps instructions take two vectors of four values each, calculate the dot product then put the result into an output vector of four values - putting the dot product result into each of the four output spots. So, this:

__m128 t1 = _mm_load_ps(&pix[0]); // load four pixels

t1 = _mm_dp_ps(t1, afx, 0xff); // dot product against afx vector

does this:

x = pix0⋅afx0 + pix1⋅afx1 + pix2⋅afx2 + pix3⋅afx3

t1 = x,x,x,x

Weird, but that's just what it does. So, when we go to make that last vector for the final dot product, we pull the first value from each temporary vector (t1,t2,t3,t4) and stuff it into the corresponding place in t1.

t1.m128_f32[1] = t2.m128_f32[0]; // result from t2 into t11

t1.m128_f32[2] = t3.m128_f32[0]; // result from t3 into t12

t1.m128_f32[3] = t4.m128_f32[0]; // result from t4 into t13

Then we do the final d.p. using t1 and bfx and get our result!

All the additions and multiplications? Gone! Vanished into the mm_dp_ps instructions.

And how does it perform?

So far we have three variations: float input native, integer input native and integer input SIMD. Since this is not a complex calculation, we need to run it a zillion times to see how it performs. So, for 10,000,000 iterations, here are the results so far:

| Float native |

1.34s |

| Integer native |

2.57s |

| Integer SSE |

4.93s |

That second row shows that the integer to float conversion takes a long time. It counts for nearly half the time of the calculation. Ouch. Let's work on that, first.

So, here's a version using SIMD instructions to handle the integer to float conversion:

float dot_byte_sse_unpack_dot(unsigned char *p16, float *af, float *bf)

{

__m128i pix = _mm_load_si128((__m128i*)p16); // read all 16 8-bit pixels

__m128i Z = _mm_setzero_si128(); // 128 bits worth of zeros.

__m128i p16L = _mm_unpacklo_epi8(pix, Z); // unpack the 8-bit pixels to

__m128i p16H = _mm_unpackhi_epi8(pix, Z); // two sets of 16-bit pixels

__m128i p32LL = _mm_unpacklo_epi16(p16L, Z); // unpack the two sets of 16-bit

__m128i p32LH = _mm_unpackhi_epi16(p16L, Z); // pixels to four sets of

__m128i p32HL = _mm_unpacklo_epi16(p16H, Z); // four 32-bit pixels.

__m128i p32HH = _mm_unpackhi_epi16(p16H, Z);

__m128 t1 = _mm_cvtepi32_ps(p32LL); // convert the 16 32-bit integer

__m128 t2 = _mm_cvtepi32_ps(p32LH); // pixels to 16 32-bit floating

__m128 t3 = _mm_cvtepi32_ps(p32HL); // point pixels

__m128 t4 = _mm_cvtepi32_ps(p32HH);

__m128 afx = _mm_load_ps(af); // do the dot product, as before

t1 = _mm_dp_ps(t1, afx, 0xff);

t2 = _mm_dp_ps(t2, afx, 0xff);

t3 = _mm_dp_ps(t3, afx, 0xff);

t4 = _mm_dp_ps(t4, afx, 0xff);

t1.m128_f32[1] = t2.m128_f32[0];

t1.m128_f32[2] = t3.m128_f32[0];

t1.m128_f32[3] = t4.m128_f32[0];

__m128 bfx = _mm_load_ps(bf);

t1 = _mm_dp_ps(t1, bfx, 0xff);

return t1.m128_f32[0];

}

Seems like a lot of work, but when you look at what the compiler generates for " for (int i=0;i<16;i++) pix[i] = pixb[i];", this way is significantly faster.

And that does, in fact, eliminate the integer to float penalty:

| Float native |

1.34s |

|

| Integer SSE unpack |

2.28 |

Use SSE to unpack int to float |

| Integer native |

2.57s |

Convert int to float |

| Integer SSE |

4.93s |

Convert int to float, using mm_dp_ps |

This one runs faster than the native integer-to-float version. But it's an uninspiring 11% speedup. Something in there is holding me down. But what?

Looking at the assembly output, the integer unpacking SSE version (above) comes in at a svelte 50 instructions while the integer native version is over 100 instructions long. So, it's certainly not about raw instruction count. It has to be something about the particular instructions that we're using: some instructions are faster than others, are there any slow ones in there?

Here's a handy reference: Instruction tables: Lists of instruction latencies, throughputs and micro-operation breakdowns for Intel, AMD and VIA CPUs. It lists the timings for all of the instructions available on all of the various modern Intel and compatible CPUs.

I happen to have an Intel Core i7-3770 in the PC I'm typing this on, which Intel has classified under the "Ivy Bridge" code name. Scrolling down to the Ivy Bridge section in that PDF and looking at some of the instructions used by my SSE code... I see that the load and unpack and int-to-float conversion instructions are all reasonably quick. But, the centerpiece of the function, _mm_dp_ps (aka 'DPPS') - the dot product instruction itself! - is very slow. And I have five of them. But that's what does all the work! Wah!!

Well, OK. That sucks. But, a dot product is just multiplication and addition. And there's certainly a way to do a parallel multiply:

pix0,pix1,pix2,pix3 x afx0,afx1,afx2,afx3 =

pix0⋅afx0, pix1⋅afx1, pix2⋅afx2, pix3⋅afx3

We can do parallel addition, too, but we don't need that. All the things we want to sum are in the same vector, not in separate vectors which is what parallel addition works on. We have to add all those items from the result of the multiplications together, for the full dot product.

We can pull each item out of the vector, add them one at a time, kindof like we're doing down at the bottom of the functions above.

float dot_byte_sse_unpack_dot_extr(unsigned char *p16, float *af, float *bf)

{

// unpack and convert, etc..

__m128i Z = _mm_setzero_si128();

__m128i pix = _mm_load_si128((__m128i*)p16);

__m128i p16L = _mm_unpacklo_epi8(pix, Z);

__m128i p16H = _mm_unpackhi_epi8(pix, Z);

__m128i p32LL = _mm_unpacklo_epi16(p16L, Z);

__m128i p32LH = _mm_unpackhi_epi16(p16L, Z);

__m128i p32HL = _mm_unpacklo_epi16(p16H, Z);

__m128i p32HH = _mm_unpackhi_epi16(p16H, Z);

__m128 t1 = _mm_cvtepi32_ps(p32LL);

__m128 t2 = _mm_cvtepi32_ps(p32LH);

__m128 t3 = _mm_cvtepi32_ps(p32HL);

__m128 t4 = _mm_cvtepi32_ps(p32HH);

__m128 afx = _mm_load_ps(af);

__m128 bfx = _mm_load_ps(bf);

// mul input chunks times afx

t1 = _mm_mul_ps(t1, afx);

t2 = _mm_mul_ps(t2, afx);

t3 = _mm_mul_ps(t3, afx);

t4 = _mm_mul_ps(t4, afx);

// t1[0] gets the sum of t1[0],t1[1],t1[2],t1[3]

// dot product one, done!

t1.m128_f32[0] =

t1.m128_f32[0] +

t1.m128_f32[1] +

t1.m128_f32[2] +

t1.m128_f32[3];

// t1[1] gets the sum of t2[0],t2[1],t2[2],t2[3]

t1.m128_f32[1] = t2.m128_f32[0] + t2.m128_f32[1] + t2.m128_f32[2] + t2.m128_f32[3];

t1.m128_f32[2] = t3.m128_f32[0] + t3.m128_f32[1] + t3.m128_f32[2] + t3.m128_f32[3];

t1.m128_f32[3] = t4.m128_f32[0] + t4.m128_f32[1] + t4.m128_f32[2] + t4.m128_f32[3];

t1 = _mm_mul_ps(t1, bfx);

t1.m128_f32[0] = t1.m128_f32[0] + t1.m128_f32[1] + t1.m128_f32[2] + t1.m128_f32[3];

return t1.m128_f32[0];

}

That's not great. We've reduced our multiplications to 4 (_mm_mul_ps), but we've increased the additions back up to 15.

| Float native |

1.34s |

|

| Integer SSE unpack> |

2.28 |

Use SSE to unpack int to float, using mm_dp_ps |

| Integer native |

2.57s |

Convert int to float |

| Integer SSE unpack, mul |

2.75s |

Unpack with SSE, use mul and 15 additions |

| Integer SSE |

4.93s |

Convert int to float, using mm_dp_ps |

And we suffer for it.

There's an instruction that can help: it's called _mm_hadd_ps (HADDP - horizontal add). It adds adjacent pairs in a vector.

A0,A1,A2,A3 HADD B0,B1,B2,B3 =

A0+A1, A2+A3, B0+B1, B2+B3

It's not exactly what we want, we want all summed in one shot. But this it can be made to work.

float dot_byte_sse_unpack_dot_hadd(unsigned char *p16, float *af, float *bf)

{

// unpack, convert, etc.

__m128i Z = _mm_setzero_si128();

__m128i pix = _mm_load_si128((__m128i*)p16);

__m128i p16L = _mm_unpacklo_epi8(pix, Z);

__m128i p16H = _mm_unpackhi_epi8(pix, Z);

__m128i p32LL = _mm_unpacklo_epi16(p16L, Z);

__m128i p32LH = _mm_unpackhi_epi16(p16L, Z);

__m128i p32HL = _mm_unpacklo_epi16(p16H, Z);

__m128i p32HH = _mm_unpackhi_epi16(p16H, Z);

__m128 t1 = _mm_cvtepi32_ps(p32LL);

__m128 t2 = _mm_cvtepi32_ps(p32LH);

__m128 t3 = _mm_cvtepi32_ps(p32HL);

__m128 t4 = _mm_cvtepi32_ps(p32HH);

__m128 afx = _mm_load_ps(af);

__m128 bfx = _mm_load_ps(bf);

// multiply

t1 = _mm_mul_ps(t1, afx);

t2 = _mm_mul_ps(t2, afx);

t3 = _mm_mul_ps(t3, afx);

t4 = _mm_mul_ps(t4, afx);

// these will fill t1 with the results

// from the four dot products. it's an ugly

// series of additions, but it works.

t1 = _mm_hadd_ps(t1, t2);

t3 = _mm_hadd_ps(t3, t4);

t1 = _mm_hadd_ps(t1, t3);

// final dot product

t1 = _mm_mul_ps(t1, bfx);

t1 = _mm_hadd_ps(t1, t1);

t1 = _mm_hadd_ps(t1, t1);

return t1.m128_f32[0];

}

| Integer SSE unpack, mul, HADD |

1.08s |

Unpack with SSE, using mul and HADD |

| Float native |

1.34s |

|

| Integer SSE unpack> |

2.28 |

Use SSE to unpack int to float using mm_dp_ps |

| Integer native |

2.57s |

Convert int to float |

| Integer SSE unpack, mul |

2.75s |

Unpack with SSE, using mul and 15 additions |

| Integer SSE |

4.93s |

Convert int to float, using mm_dp_ps |

Now that's what I'm talking about! More than twice as fast as my real benchmark, and even faster than the native float input version! Ultimate victory!

Maybe they'll speed up the _mm_dp_ps instruction up in future CPUs. But until then, this does the job pretty well.